Bridging Trust and Privacy Gaps in Open Finance: A Collaborative Effort

IITB Trust Lab, in collaboration with Silence Laboratories, hosted an exclusive Roundtable Discussion and Workshop on Trust and Privacy Gaps in Open Finance on November 18, 2024. Bringing together key decision-makers from the finance industry, the session explored the challenges of secure data sharing within the Account Aggregator ecosystem. A focal point of the discussion was Secure Multi-Party Computation (SMPC)—a cutting-edge cryptographic approach poised to redefine data privacy by enabling computations on encrypted data without exposing sensitive information.

The workshop provided a platform to identify pressing privacy issues, explore research directions, assess SMPC’s practical applications, and discuss the regulatory and economic factors influencing adoption. With the enforcement of the Digital Personal Data Protection Act (DPDPA) on the horizon, the insights from this discussion offer a roadmap for balancing innovation, security, and compliance in the evolving financial landscape.

For a deeper understanding of the key takeaways and proposed solutions, read the full report below.

The Current Account Aggregator Ecosystem

While the Account Aggregator (AA) framework has revolutionised secure data sharing, several problems still keep it from reaching its full potential. One of the central issues is the quality of data shared by Financial Information Providers (FIPs): variables essential for comprehensive financial analysis are sometimes missing. For example, bond data and fixed deposit details are often incomplete. Another concern is the handling of joint accounts, where it remains ambiguous whose consent is required for data sharing. Furthermore, Financial Information Users (FIUs) often face slow response times from FIPs, which affects their operations and customer experience.

Despite these hurdles, the AA system has proven itself indispensable. However, infrastructure requirements were not adequately anticipated in the initial stages. This has become more apparent with the recent dramatic increase in data footprints: current server capacities struggle to handle the growing load. FIPs are also increasingly wary of data privacy risks as the number of players in the ecosystem grows. There is also a potential discrepancy between the consent specifics provided by customers and the actual handling of data once it reaches FIUs, which could easily erode trust in the system.

Incentives to enhance data privacy were also outlined. FIPs, as significant consumers of data themselves, stand to gain from better data governance. Improved data quality and access translate to better underwriting for institutions. They also reduce fraud and minimise errors by eliminating reliance on insecure data formats like emails and PDFs, thereby benefiting individuals as well. These measures not only help honest participants but also deter malicious actors.

The looming enforcement of the Digital Personal Data Protection Act (DPDPA) adds another dimension to the conversation. Existing RBI regulations govern the conduct of AAs, but they extend to FIPs and FIUs only indirectly, through their interaction with AAs. Moreover, the interplay between AA regulations on consent specificity and the DPDPA’s privacy mandates will require careful alignment. There is a general consensus on the AA system’s transformative role and the need for sustained efforts to address its current challenges while aligning with emerging regulatory frameworks.

Secure Multi-Party Computation

A new model proposed by Silence Laboratories aims to address some of the pressing privacy challenges within the AA ecosystem. The framework uses Secure Multi-Party Computation (SMPC, referred to below simply as MPC) to enhance privacy and security in financial data handling. Below is a short primer on MPC taken from an article co-authored by Silence Laboratories.

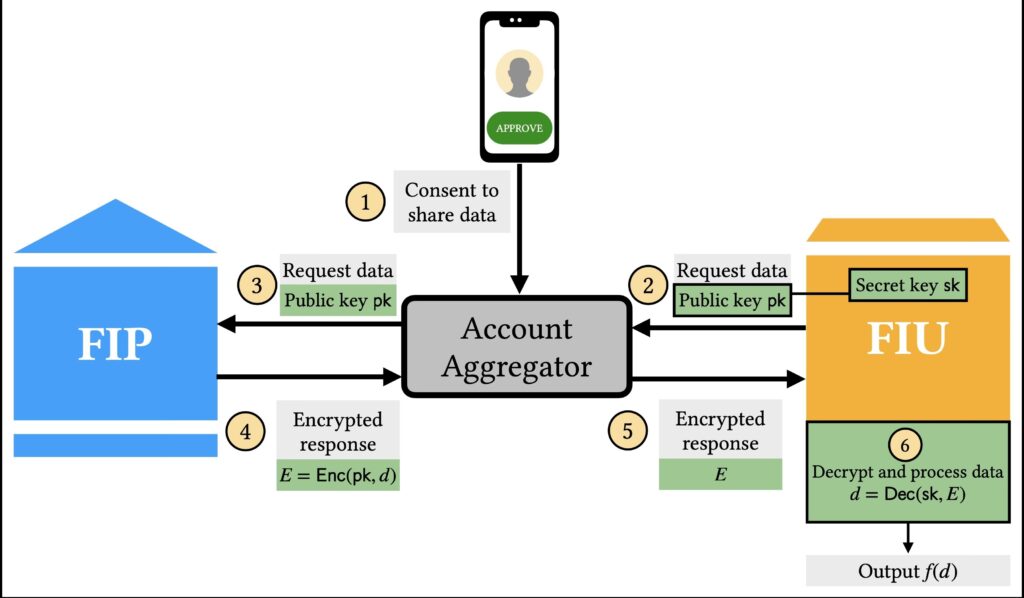

In the current system, Financial Information Users (FIUs) typically decrypt and access the complete set of data requested from Financial Information Providers (FIPs).

Fundamental cryptography (encryption, digital signatures, etc.) can be thought of as mathematically guaranteed enforcement of policies that govern access to data. This was well suited to the era of internet applications that required data to be secured only in transit and at rest. The next generation of applications, such as Open Finance, requires data to be secured while in use. This warrants the adoption of advanced cryptographic tools and Privacy Enhancing Technologies (PETs).

Multiparty Computation (MPC) is one such PET. It covers the extraction of utility from private data that is jointly held across different parties. In its most general usage, MPC refers to the evaluation of arbitrary functions upon such data without any individual party (or specified subset of parties) learning any information beyond its own input and the output of the computation. Under a well-specified non-collusion assumption, an MPC protocol run amongst a group of parties emulates an oracle that accepts each party’s private input, performs some computation on these inputs, and returns private (or public) outputs to each party. Importantly, the oracle does not leak any intermediate state or other secret information.
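To make the oracle idea concrete, here is a minimal sketch of one of the simplest MPC building blocks, additive secret sharing, used to compute a joint sum. It is an illustration only and is not taken from the Silence Laboratories article: the modulus `PRIME`, the function names `share` and `secure_sum`, and the example balances are all assumptions, and the sketch assumes honest parties that do not collude.

```python
import secrets

# Illustrative modulus for share arithmetic (an assumption, not from the report).
PRIME = 2**61 - 1


def share(value, n_parties):
    """Split `value` into n additive shares that sum to `value` mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_inputs):
    """Simulate n parties jointly computing the sum of their private inputs."""
    n = len(private_inputs)
    # Each party splits its own input and sends one share to every party.
    distributed = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received (column j). Each share on its
    # own is a uniformly random value and reveals nothing about any input.
    partial_sums = [sum(row[j] for row in distributed) % PRIME for j in range(n)]
    # Only the partial sums are published; together they yield the total.
    return sum(partial_sums) % PRIME


balances = [1200, 560, 9800]  # hypothetical private inputs, one per party
total = secure_sum(balances)
```

Each party learns the total and its own input, which mirrors the oracle described above. Real protocols support far richer functions than a sum and add protections against dishonest participants.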

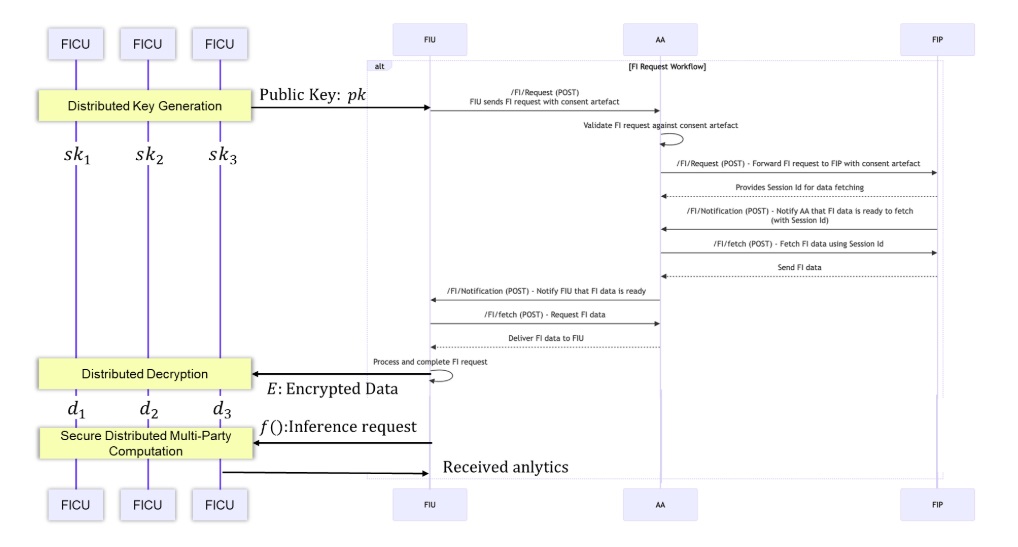

Silence Laboratories’ proposal introduces a significant shift: instead of granting a single FIU access to all the data, the ephemeral key ‘sk’ required for decryption is distributed across multiple Financial Information Compute Unit (FICU) nodes. This ensures that no single entity has unilateral access to the complete dataset, significantly improving data security and reducing the risks of misuse.
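The idea of distributing a key across nodes can be sketched with a simple n-of-n XOR split. This is a hedged illustration, not the scheme used in the proposal: the report does not say how ‘sk’ is shared, and the names `split_key` and `recombine`, the 32-byte key length, and the choice of three nodes are assumptions.

```python
import secrets
from functools import reduce


def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(sk, n_nodes):
    """Split `sk` into n shares; all n are needed to reconstruct it."""
    # The first n-1 shares are uniformly random.
    shares = [secrets.token_bytes(len(sk)) for _ in range(n_nodes - 1)]
    # The last share is sk XORed with all the random shares.
    last = reduce(xor_bytes, shares, sk)
    return shares + [last]


def recombine(shares):
    """XOR all shares together (shown only to check the split is correct)."""
    return reduce(xor_bytes, shares)


sk = secrets.token_bytes(32)      # stand-in for the ephemeral key
node_shares = split_key(sk, 3)    # one share per hypothetical FICU node
```

Any subset of fewer than all the shares is statistically independent of `sk`, so no single node can decrypt on its own. In an actual MPC deployment the nodes would compute on their shares jointly rather than ever calling something like `recombine` in one place.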

Secure MPC as a mechanism ties consent directly to computation. Unlike conventional models where consent is often tied to data transfer, this approach ensures that data is only accessible for computation that aligns with the user’s consent. This cryptographic safeguard creates a more robust system, protecting against malicious actors who might exploit weaknesses in the existing framework.

By redistributing access and strengthening security at a cryptographic level, this model addresses critical gaps in the current system, paving the way for a more secure and trustworthy AA framework.

Returning to First Principles

Before settling operational questions such as “who should be running the nodes?” in the proposed model, it is imperative to get clarity on foundational questions, such as “what is it that we are trying to solve?”

At the heart of the issue is the need for citizens to trust that their data will not be misused. Misuse typically occurs in two primary forms: the first is unauthorised access, where data falls into the hands of individuals or entities that were not permitted to access it; and the second is unintended usage, where data is used for purposes beyond the original consent, such as spam calls or intrusive cross-selling campaigns.

No matter how secure any new tool or algorithm is, it alone would never be enough to drive adoption. While the technical sophistication of secure MPC may be indisputable, real-world adoption hinges on economic incentives and the legal underpinnings that govern financial institutions. Since such institutions usually operate in heavily regulated environments, they are resistant to change unless the new technology offers significant, demonstrable advantages.

Legal Aspects

Current legal frameworks, while essential, cannot be used for prototyping possible future paradigms. Additionally, and more pertinently, any regulatory standard should define objectives — what must be done — and not the methods regarding how it must be done, to allow room for innovation as technology evolves. Mandating specific standards could quickly render them obsolete, given the rapid pace of R&D in this field. A flexible framework is necessary to balance oversight with adaptability.

Economic Incentives

Some entities might be interested in secure MPC for the security guarantees it can provide. For custodians, a transition from one-time payments to revenue models based on API calls could prove lucrative. However, many may resist due to concerns over possible compliance and implementation challenges. The costs of deploying and maintaining this new technology are also still unknown, which could prove to be a significant hurdle.

Taking the above into consideration, identifying a few specific use cases where the technology can demonstrate its efficacy would be ideal. By showcasing tangible value and unlocking new opportunities for market organisation, these use cases could drive adoption and illustrate the transformative potential of the technology.

The Path Forward

There is a shared recognition amongst all concerned regarding the importance of privacy-enhancing technologies (PETs) in addressing critical challenges within the financial data-sharing ecosystem. Any initiative that advances privacy and fosters meaningful conversations should be embraced, even if the journey involves complexities. The iterative and often messy nature of research and development in emerging fields is a necessary process to create modern, robust, scalable solutions.

One of the roles of cryptography is reducing reliance on trust. The future is one where cryptographic assurances could replace some of the functions currently upheld by legal structures, marking a shift from trust in organisations to trust in mathematical certainty. While this paradigm may seem theoretical and infeasible at present, it represents a direction which the ecosystem will likely gravitate towards.

Privacy and data protection are problems worth solving, and their solutions hold the potential to reimagine how data is shared and managed. But adoption of any new technology will likely come only after empirical evidence demonstrates legal compliance and lucrative financial outcomes.